serhii.net

In the middle of the desert you can say anything you want

-

Day 1913 (27 Mar 2024)

More latex tricks for spacing and references

\autoref (from the hyperref package) is like \ref, but it adds the word, not just the number: 3.2 -> Figure 3.2. Source: cross referencing - What’s the difference between \ref and \autoref? - TeX - LaTeX Stack Exchange

-

Day 1902 (16 Mar 2024)

Latex trivial TODO command

Wrapping text in this command makes it stand out; it is also greppable by TODO, which removes the need to remember commands. (\color needs the xcolor or color package.)

\newcommand{\TODO}[1]{{\color{magenta}#1}}

-

Day 1900 (14 Mar 2024)

Locally debugging Huggingface Dataset scripts

Previously:

Is there a suggested way of debugging dataset generators? - 🤗Datasets - Hugging Face Forums

Instead of committing etc. every time, one can clone the dataset path locally through git and then point load_dataset() to that local folder with the dataset script file!
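A minimal sketch of that workflow, assuming the dataset repository was cloned into a local folder that contains the loading script. The helper names `find_dataset_script` and `load_local_dataset` are mine, not part of the library, and newer versions of datasets may need extra arguments to run a local script (or may not run scripts at all), so check this against the version you use.

```python
from pathlib import Path


def find_dataset_script(folder):
    """Return the first *.py file in the cloned dataset folder, or None."""
    scripts = sorted(Path(folder).glob("*.py"))
    return scripts[0] if scripts else None


def load_local_dataset(folder, config_name=None):
    """Load a dataset from a local clone that contains a dataset script."""
    # Imported lazily so the helper above works without the library installed.
    from datasets import load_dataset

    if find_dataset_script(folder) is None:
        raise FileNotFoundError(f"no dataset script (*.py) found in {folder}")
    # Pointing load_dataset() at the folder runs the local script,
    # so edits can be tested without committing and pushing.
    return load_dataset(str(folder), config_name)
```

Calling `load_local_dataset("./my_dataset", "SomeConfig")` (both names hypothetical) after each edit to the script gives a quick edit-and-retry loop.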

-

Day 1899 (13 Mar 2024)

Huggingface Hub prefers zip archives because they support streaming

Random nugget from Document to compress data files before uploading · Issue #5687 · huggingface/datasets:

- gz, to compress individual files

- zip, to compress and archive multiple files; zip is preferred rather than tar because it supports streaming out of the box

(Streaming: https://huggingface.co/docs/datasets/v2.4.0/en/stream TL;DR: for very large datasets, don’t download the entire dataset; pass streaming=True to the load_dataset() function.)
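A small sketch of what streaming looks like in practice. `take` and `peek_streamed` are helper names I made up; the only library call is `load_dataset(..., streaming=True)`, which returns an iterable that yields examples on demand.

```python
from itertools import islice


def take(examples, n):
    """Return the first n items of any iterable without consuming the rest."""
    return list(islice(examples, n))


def peek_streamed(dataset_name, split="train", n=3):
    """Fetch the first n examples of a Hub dataset without a full download."""
    # Lazy import: only needed when actually talking to the Hub.
    from datasets import load_dataset

    # streaming=True yields examples lazily instead of downloading everything.
    stream = load_dataset(dataset_name, split=split, streaming=True)
    return take(stream, n)
```

`take` works on any iterable, so it can be tried on a plain generator before pointing `peek_streamed` at a real dataset name.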

-

Day 1877 (20 Feb 2024)

Latex has paragraphs and subparagraphs

TIL from NASA’s (!) docs that there are two sub-levels after subsubsection:

    \subsubsection{Example Sub-Sub-Section}
    \label{sec:example-subsubsection}

    \ref{sec:example-subsubsection} is an example of \texttt{subsubsection}.

    \paragraph{Example Paragraph}
    \label{sec:example-paragraph}

    \ref{sec:example-paragraph} is an example of \texttt{paragraph}.

    \subparagraph{Example Sub-Paragraph}
    \label{sec:example-subparagraph}

    \ref{sec:example-subparagraph} is an example of \texttt{subparagraph}.

I so needed them!

Things I'll do different next time when creating datasets

-

Day 1875 (18 Feb 2024)

Huggingface dataset build configs

Goal: create multiple dataset configs for 231203-1745 Masterarbeit LMentry-static-UA task.

- Example: datasets/templates/new_dataset_script.py at main · huggingface/datasets

- Tutorial: Builder classes

- One example they give: https://huggingface.co/datasets/frgfm/imagenette/blob/main/imagenette.py

Developing:

- One can provide paths to local files in _URLS as well, to speed up development!

It’s not magic dictionaries, it’s basically syntax known to me (with Features etc.) which is neat!

    elif self.config.name == "WhichWordWrongCatTask":
        yield key, {
            "question": data["question"],
            "correctAnswer": data["correctAnswer"],
            "options": data["additionalMetadata_all_options"]
            # "second_domain_answer": "" if split == "test" else data["second_domain_answer"],
        }

Ah, dataset viewer not available :( But apparently one can use manual configs and then it works: https://huggingface.co/docs/hub/datasets-manual-configuration

I can use https://huggingface.co/datasets/scene_parse_150/edit/main/README.md as an example here.

    dataset_info:
    - config_name: scene_parsing
      features:
      - name: image
        dtype: image
      - name: annotation
        dtype: image
      - name: scene_category
        dtype:
          class_label:
            names:
              '0': airport_terminal
              '1': art_gallery
              '2': badlands
    - config_name: instance_segmentation
      features:
      - name: image
        dtype: image
      - name: annotation
        dtype: image
    …

This shows WISTask in the viewer, but not LOWTask (because 'str' object has no attribute 'items'):

    configs:
    - config_name: LOWTask
      data_files: "data/tt_nim/LOWTask.jsonl"
      features:
      - name: question
        dtype: string
      - name: correctAnswer
        dtype: string
      default: true
    - config_name: WISTask
      data_files: "data/tt_nim/WISTask.jsonl"

And I can’t download either with python because

    Traceback (most recent call last):
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 1873, in _prepare_split_single
        writer.write_table(table)
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/arrow_writer.py", line 568, in write_table
        pa_table = table_cast(pa_table, self._schema)
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/table.py", line 2290, in table_cast
        return cast_table_to_schema(table, schema)
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/table.py", line 2248, in cast_table_to_schema
        raise ValueError(f"Couldn't cast\n{table.schema}\nto\n{features}\nbecause column names don't match")
    ValueError: Couldn't cast
    question: string
    correctAnswer: string
    templateUuid: string
    taskInstanceUuid: string
    additionalMetadata_kind: string
    additionalMetadata_template_n: int64
    additionalMetadata_option_0: string
    additionalMetadata_option_1: string
    additionalMetadata_label: int64
    additionalMetadata_t1_meta_pos: string
    additionalMetadata_t1_meta_freq: int64
    additionalMetadata_t1_meta_index: int64
    additionalMetadata_t1_meta_freq_quantile: int64
    additionalMetadata_t1_meta_len: int64
    additionalMetadata_t1_meta_len_quantile: string
    additionalMetadata_t1_meta_word_raw: string
    additionalMetadata_t2_meta_pos: string
    additionalMetadata_t2_meta_freq: int64
    additionalMetadata_t2_meta_index: int64
    additionalMetadata_t2_meta_freq_quantile: int64
    additionalMetadata_t2_meta_len: int64
    additionalMetadata_t2_meta_len_quantile: string
    additionalMetadata_t2_meta_word_raw: string
    additionalMetadata_reversed: bool
    additionalMetadata_id: int64
    system_prompts: list<item: string>
      child 0, item: string
    to
    {'question': Value(dtype='string', id=None), 'correctAnswer': Value(dtype='string', id=None), 'templateUuid': Value(dtype='string', id=None), 'taskInstanceUuid': Value(dtype='string', id=None), 'additionalMetadata_kind': Value(dtype='string', id=None), 'additionalMetadata_template_n': Value(dtype='int64', id=None), 'additionalMetadata_all_options': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), 'additionalMetadata_label': Value(dtype='int64', id=None), 'additionalMetadata_main_cat_words': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), 'additionalMetadata_other_word': Value(dtype='string', id=None), 'additionalMetadata_cat_name_main': Value(dtype='string', id=None), 'additionalMetadata_cat_name_other': Value(dtype='string', id=None), 'additionalMetadata_id': Value(dtype='int64', id=None), 'system_prompts': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None)}
    because column names don't match

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "test.py", line 18, in <module>
        ds = load_dataset(path, n)
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/load.py", line 1797, in load_dataset
        builder_instance.download_and_prepare(
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 890, in download_and_prepare
        self._download_and_prepare(
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 985, in _download_and_prepare
        self._prepare_split(split_generator, **prepare_split_kwargs)
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 1746, in _prepare_split
        for job_id, done, content in self._prepare_split_single(
      File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 1891, in _prepare_split_single
        raise DatasetGenerationError("An error occurred while generating the dataset") from e
    datasets.builder.DatasetGenerationError: An error occurred while generating the dataset

Oh goddammit. Relevant:

- pytorch - Load dataset with datasets library of huggingface - Stack Overflow

- python - Huggingface datasets ValueError - Stack Overflow is an issue because he loaded it locally

- machine learning - Huggingface Load_dataset() function throws “ValueError: Couldn’t cast” - Stack Overflow also Ukrainian
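The ValueError above lists two different sets of column names, so a quick check of which columns each JSONL file actually has can help narrow things down. A stdlib-only sketch; the function names are mine and it only looks at top-level keys.

```python
import json


def jsonl_columns(path):
    """Collect the set of top-level keys used across all rows of a JSONL file."""
    columns = set()
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():  # skip blank lines
                columns.update(json.loads(line).keys())
    return columns


def column_diff(path_a, path_b):
    """Return (only_in_a, only_in_b) as sorted lists of column names."""
    a, b = jsonl_columns(path_a), jsonl_columns(path_b)
    return sorted(a - b), sorted(b - a)
```

Running `column_diff` on two task files shows exactly which columns would fail to cast if both were forced into one schema.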

I give up.

Back to the script.

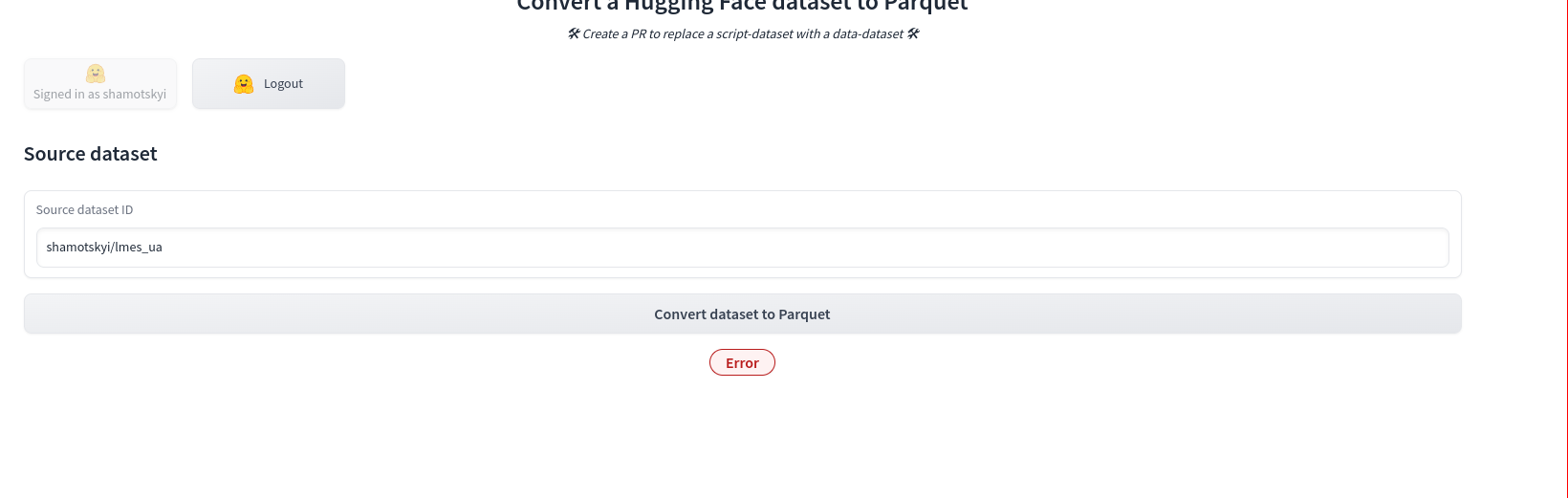

Last thing I’ll try (as suggested by tau/scrolls · Dataset Viewer issue: DatasetWithScriptNotSupportedError):

Convert Dataset To Parquet - a Hugging Face Space by albertvillanova

…

feels so unsatisfying not to see the datasets in the viewer :(

tau/scrolls · Dataset Viewer issue: DatasetWithScriptNotSupportedError this feels like something relevant to me. We’ll see.

python random sample vs random choices

Got bit by this.

random — Generate pseudo-random numbers — Python 3.12.2 documentation

- SAMPLE() (random.sample()) IS WITHOUT REPLACEMENT: no duplicates unless they are already present in the list (same as with random.shuffle())

- CHOICES() (random.choices()) IS WITH REPLACEMENT: duplicates MAY happen.

Also: random.shuffle() works in-place. Sampling len(x) elements, i.e. random.sample(x, len(x)), is a way to shuffle immutable sequences such as tuples.
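A short demonstration of the difference, using a seeded `random.Random` only so that repeated runs behave the same; the variable names are arbitrary.

```python
import random

rng = random.Random(0)  # seeded only to make the run repeatable
items = ("a", "b", "c", "d")  # a tuple: immutable, so shuffle() can't be used

sampled = rng.sample(items, k=3)  # without replacement: 3 distinct items
chosen = rng.choices(items, k=10)  # with replacement: 10 picks from 4 items
shuffled = rng.sample(items, k=len(items))  # shuffled copy, returned as a list

try:
    rng.sample(items, k=5)  # asking for more than len(items)
    oversample_failed = False
except ValueError:
    oversample_failed = True  # sample() refuses, choices() would not
```

With 10 picks from 4 items, `chosen` is guaranteed to contain repeats, while `sampled` never does as long as `items` itself has no duplicates.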

JSONL to JSON conversion with jq

    # JSONL -> JSON
    jq -s '.' input.jsonl > output.json
    # JSON -> JSONL
    jq -c '.[]' input.json > output.jsonl
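If jq is not at hand, roughly the same two conversions can be done with the standard library. A sketch, with function names of my own choosing; `ensure_ascii=False` is there so non-ASCII text (e.g. Ukrainian) stays readable in the output.

```python
import json


def jsonl_to_json(src, dst):
    """Read one JSON value per line and write them as a single JSON array."""
    with open(src, encoding="utf-8") as f:
        rows = [json.loads(line) for line in f if line.strip()]
    with open(dst, "w", encoding="utf-8") as f:
        json.dump(rows, f, ensure_ascii=False, indent=2)


def json_to_jsonl(src, dst):
    """Read a JSON array and write each element on its own line."""
    with open(src, encoding="utf-8") as f:
        rows = json.load(f)
    with open(dst, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")
```

Unlike jq, this loads the whole file into memory, which is fine for small datasets but not for very large ones.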

-

Day 1872 (15 Feb 2024)

LMentry improving words and sentences by frequency

Word frequencies

- oprogramador/most-common-words-by-language: List of the most common words in many languages links to https://raw.githubusercontent.com/hermitdave/FrequencyWords/master/content/2016/uk/uk_50k.txt

- of-fucking-course:

    я 116180
    не 99881
    в 53280
    что 45257
    ты 38282
    на 37762
    що 34824
    и 34712
    это 33254
    так 31178

- most-common-words-multilingual/data/wordfrequency.info/uk.txt at main · frekwencja/most-common-words-multilingual similar to the above but much better, without ыs.

- ssharoff/robust: Robust frequency estimates for word lists has nice info about filtering wordlists etc to make them ‘robust’

- Includes a link to Ukrainian: http://corpus.leeds.ac.uk/frqc/robust/wikipedia-uk-robust.tsv

- … that I can’t get to connect


- Where can I find the list of Ukrainian words ordered by frequency of use? : r/Ukrainian

- MOVA.info

- I see no way to download their corpora: Корпус української мови MOVA.info


- Find some way to get one of the old/cool/known big ukr corpora

- Dictionary of the Ukrainian Language

- nice! But not directly usable. But very nice.

- Or?.. dmklinger/ukrainian: English to Ukrainian dictionary

- YES!

words.json

- One of its sources:

- Download – Dbnary

- HA, it’s a really awesome place worth looking at further!


Anyway - found the perfect dictionary. Wooho.
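For the frequency lists above that mix in Russian words, a rough filter could look like the sketch below. It assumes the "word count" line format shown in the sample and simply drops words containing letters that exist in the Russian alphabet but not in the Ukrainian one; the function names are mine, and the heuristic is coarse.

```python
# Letters that exist in the Russian alphabet but not in the Ukrainian one.
RUSSIAN_ONLY = set("ыэъё")


def parse_frequency_lines(lines):
    """Yield (word, count) pairs from lines formatted as 'word count'."""
    for line in lines:
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            yield parts[0], int(parts[1])


def drop_russian_spelling(pairs):
    """Keep only words without Russian-only letters (a coarse heuristic)."""
    return [(w, c) for w, c in pairs if not (set(w.lower()) & RUSSIAN_ONLY)]
```

On the sample above this removes "ты" and "это" but keeps "что", which uses only letters shared by both alphabets, so a proper dictionary lookup is still needed for a clean list.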
