serhii.net

In the middle of the desert you can say anything you want

-

Day 2410 (07 Aug 2025)

fish arguments and functions

- argparse - parse options passed to a fish script or function — fish-shell 4.0.2 documentation

- function - create a function — fish-shell 4.0.2 documentation

Below:

- h/help is a flag

- o/output-dir gets+requires a value

or returnfails on unknown-a/--args- If found, the value is removed from

$argvand put into_flag_argname set -query (tests if var is set)-local

argparse h/help o/output-dir= -- $argv or return if set -ql _flag_help echo "Usage: script.fish [-o/--output-dir <dir>] <input_files_or_directory>" echo "Example: script.fish --output-dir /path/to/output file1.png directory_with_pngs/ dir/*.png" exit 0 end if set -ql _flag_output_dir set output_dir $_flag_output_dir end for arg in $argv # do something with file $arg end

-

Day 2407 (04 Aug 2025)

Using a private gitlab repository with uv

Scenario:

- your uv source package (that lives in a gitlab source project) depends on a target package from a gitlab target project’s package registry.

- you want

uv addetc. to work transparently - you want gitlab CI/CD pipelines to work transparently

Links

TL;DR

- Have a gitlab token with at least

read_apiscope and Developer+ role - Find the address of the registry through Gitlab UI

https://__token__:glpat-secret-token@gitlab.de/api/v4/projects/1111/packages/pypi/simplehttps://gitlab.de/api/v4/projects/1111/packages/pypi/simple- FROM A GROUP1:

https://gitlab.example.com/api/v4/groups/<group_id>/-/packages/pypi/simple ${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/packages/pypi

- pyproject: add it registry, see below

- to authenticate UV, you can use:

- token inside URI, or

UV_INDEX_PRIVATE_REGISTRY_USERNAME/PASSWORDenv variables, replacing PRIVATE_REGISTRY with the name you gave to it in pyproject.toml~/.netrcfile: .netrc - everything curl

Gitlab

- In Gitlab, use/create an access token with read_xxx permissions in the project —

read_api, read_repository, read_registryare enough — and Developer role. - To get the package registry address,

- Use this URI for the group registry (it’s the best for multiple projects in the same group):

https://gitlab.example.com/api/v4/groups/<group_id>/-/packages/pypi/simple- You can get the group ID from the group page, hamburger menu in upper-right, “copy group id”. Will be an int.

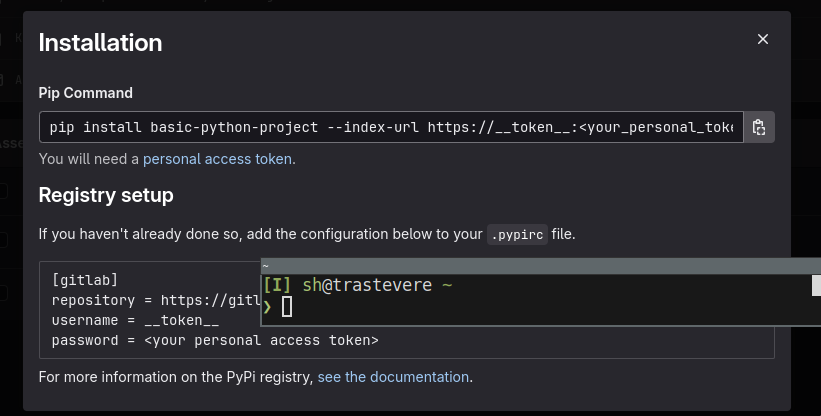

- open the registry, click on a package, click “install” and you’ll see it all:

- different packages in different projects will have different registries!

- The project repository URI is generally

${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/packages/pypi/simple

- Use this URI for the group registry (it’s the best for multiple projects in the same group):

UV

Add the new package registry as index

tool.uv.index name = "my-registry" url = "https://__token__:glpat-secret-token@gitlab.de/api/v4/projects/1111/packages/pypi/simple" # authenticate = "always" # see below # ignore-error-codes = [401]The URI either contains token inside URI or doesn’t. The examples below are /projects/xxx, ofc a group registry works as well. -

https://__token__:glpat-secret-token@gitlab.de/api/v4/projects/1111/packages/pypi/simple- token inside the URI -https://gitlab.de/api/v4/projects/1111/packages/pypi/simple— auth happening through env. variables or~/.netrcUv auth

Through the server URI

url = "https://__token__:glpat-secret-token@gitlab.de/api/v4/projects/1111/packages/pypi/simple"Through env variables:

export UV_INDEX_PRIVATE_REGISTRY_USERNAME=__token__ export UV_INDEX_PRIVATE_REGISTRY_PASSWORD=glpat-secret-tokenPRIVATE_REGISTRYneeds to be replaced with the name of the registry . So e.g. for the pyproject above it’sUV_INDEX_**MY_REGISTRY**_USERNAME.Through a .netrc file

From Authentication | uv / HTTP Authentication and PyPI packages in the package registry | GitLab Docs: Create a

~/.netrc:machine gitlab.example.com login __token__ password <personal_token>It will use these details, when you

uv add ... -vyou’d see a line likeDEBUG Checking netrc for credentials for https://gitlab.de/api/v4/projects/1111/packages/pypi/simple/packagename/ DEBUG Found credentials in netrc file for https://gitlab.de/api/v4/projects/1111/packages/pypi/simple/packagename/NB

gitwill also use these credentials — so if the token’s scope doesn’t allow e.g. pushing, you won’t be able togit push. Use a wider scope or a personal access token (or env. variables)Usage

- When you

uv add yourpackage, uv looks for packages in all registries- The usual

pypione is on by default

- The usual

- If gitlab doesn’t find one in the gitlab registry or the user is unauthenticated, gitlab by default transparently mirrors pypi

- If auth fails for any of the indexes, uv will fail loudly — you can

ignore-error-codes = [401]to make uv keep looking inside the other registries

Gitlab CI/CD pipelines

CI/CD pipelines have to have access to the package as well, when they run.

GitLab CI/CD job token | GitLab Docs:

You can use a job token to authenticate with GitLab to access another group or project’s resources (the target project). By default, the job token’s group or project must be added to the target project’s allowlist.

In the target project (the one that needs to be resolved, the one with the private registry), in Settings->CI/CD -> Job token permissions add the source project (the one that will access the packages during CI/CD).

You can just add the group parent of all projects as well, then you don’t have to add any individual ones.

Then

$CI_JOB_TOKENcan be used to access the target projects. For example, through a ~/.netrc file (note the username!)machine gitlab.example.com username gitlab-ci-token password $CI_JOB_TOKENBonus round: gitlab-ci-local

I love firecow/gitlab-ci-local.

When running gitlab-ci-local things, the

CI_JOB_TOKENvariable is empty. You can create a.gitlab-ci-local-variables.yaml(don’t forget to gitignore it!) with this variable, it’ll get used automatically and your local CI/CD pipelines will run as well:CI_JOB_TOKEN=glpat-secret-token(or just `gitlab-ci-local –variable CI_JOB_TOKEN=glpat-secret-token your-command)

-

Day 2403 (31 Jul 2025)

jj Jujutsu first impressions

Tutorial and bird’s eye view - Jujutsu docs Git replacement using git under the hood.

First encountered here: Jujutsu for busy devs | Hacker News / Jujutsu For Busy Devs | maddie, wtf?!

For anyone who’s debating whether or not jj is worth learning, I just want to highlight something. Whenever it comes up on Hacker News, there are generally two camps of people: those who haven’t given it a shot yet and those who evangelize it.

Alright, let’s try!

To use on top of an existing git repository:

jj git init --colocate . jj git clone --colocate git@github.com:maddiemort/maddie-wtf.gitCommand line completions:

COMPLETE=fish jj | source

-

Day 2395 (23 Jul 2025)

Firefox awesomeness

How to Firefox | Hacker News / 🦊 How to Firefox - Kaushik Gopal’s Website

- Type

/and start typing for quick find (vs ⌘F). But dig this,'and Firefox will only match text for hyper links - URL bar search shortcuts:

*for bookmarks,%for open tabs,^for history - If you have an obnoxious site disable right click, just hold Shift and Firefox will bypass and show it to you. No add-one required.

(emph mine)

Damn. DAMN.

I need to set this up in qutebrowser as well, it’s brilliant.

- Type

-

Day 2383 (11 Jul 2025)

LLM inference handbook production

For later: Introduction | LLM Inference in Production

-

Day 2359 (17 Jun 2025)

Autorandr has neat short virtual configurations

autorandr -c vertical-reversedescribes my home layout,autorandr -c horizontaldescribes my work layout. Awesome.The following virtual configurations are available: off Disable all outputs common Clone all connected outputs at the largest common resolution clone-largest Clone all connected outputs with the largest resolution (sca- led down if necessary) horizontal Stack all connected outputs horizontally at their largest re- solution vertical Stack all connected outputs vertically at their largest reso- lution horizontal-reverse Stack all connected outputs horizontally at their largest re- solution in reverse order vertical-reverse Stack all connected outputs vertically at their largest reso- lution in reverse order

-

Day 2347 (05 Jun 2025)

Evaluation harnesses list and notes

Previously:

- more generic: 250317-1847 Current LLM evaluation landscape

- 250505-1019 Evaluating RAG

- 250415-1640 Evaluating NLG

EleutherAI lm-eval-harness

Link: EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of language models.

Running

Running HF model with model args (hf model name in

model_argsas well):lm_eval --model hf \ --model_args pretrained=EleutherAI/pythia-160m,revision=step100000,dtype="float" \ --tasks lambada_openai,hellaswag \ --device cuda:0 \ --batch_size 8Task formats

YAML+jinja, can run python code in some of the params.

task: coqa dataset_path: EleutherAI/coqa output_type: generate_until training_split: train validation_split: validation doc_to_text: !function utils.doc_to_text doc_to_target: !function utils.doc_to_target process_results: !function utils.process_results should_decontaminate: true doc_to_decontamination_query: "{{story}} {{question.input_text|join('\n')}}" generation_kwargs: until: - "\nQ:" metric_list: - metric: em aggregation: mean higher_is_better: true - metric: f1 aggregation: mean higher_is_better: trueFeatures

- Supports HF models/datasets

- Jinja templates, separate packages for IFeval, promptsource etc.

- Many options for post-processing, answer extraction, fewshot etc. the usual, all live in the YAML

Inference

- HF, incl. local, OpenAI API format, but other frameworks as well — nvidia nemo etc.

- Llama.cpp / vLLM

- multi-GPU with accelerate:

accelerate launch -m lm_eval --model ...

Saving and caching

- JSON through

--output_pathparam --log_sampleslogs samples--use-cachecaches stuff and reruns it only when needed--hf_hub_log_argslogs the results to HF ! (documentation broken though)

Misc

- Last (most complex) version of HF Hub leaderboard (before it got discontinued): lm-evaluation-harness/lm_eval/tasks/leaderboard/README.md at main · EleutherAI/lm-evaluation-harness

- Python integration:

simple_evaluate(): lm-evaluation-harness/docs/interface.md at main · EleutherAI/lm-evaluation-harness

lmms-eval

- EvolvingLMMs-Lab/lmms-eval: Accelerating the development of large multimodal models (LMMs) with one-click evaluation module - lmms-eval.

- very close in spirit to eval-harness but multimodal

Sample1:

export HF_HOME="~/.cache/huggingface" export AZURE_OPENAI_API_KEY="" export AZURE_OPENAI_API_BASE="" export AZURE_OPENAI_API_VERSION="2023-07-01-preview" # pip install git+https://github.com/EvolvingLMMs-Lab/lmms-eval.git python3 -m lmms_eval \ --model openai_compatible \ --model_args model_version=gpt-4o-2024-11-20,azure_openai=True \ --tasks mme,mmmu_val \ --batch_size 1Task yamls look very similar: lmms-eval/lmms_eval/tasks/gqa/gqa.yaml at main · EvolvingLMMs-Lab/lmms-eval

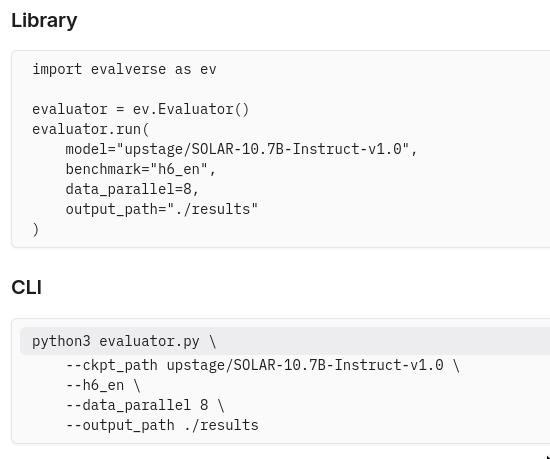

Evalverse

- Evalverse | Evalverse Docs

- Looks abandoned

- One package to run multiple eval frameworks:

- lm-eval for usual benchmarks

- Chat: evaluated using FastChat llm-as-a-judge

- MT-Bench

- IFEval

- EQ-Bench

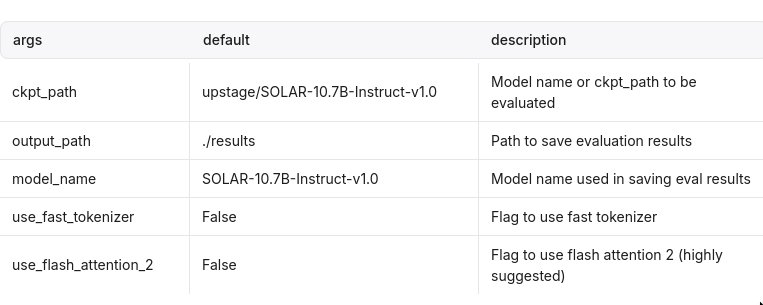

- Their params:Evaluation | Evalverse Docs

- also “Common Arguments” (hah)

- And then arguments for each benchmark separately

- also “Common Arguments” (hah)

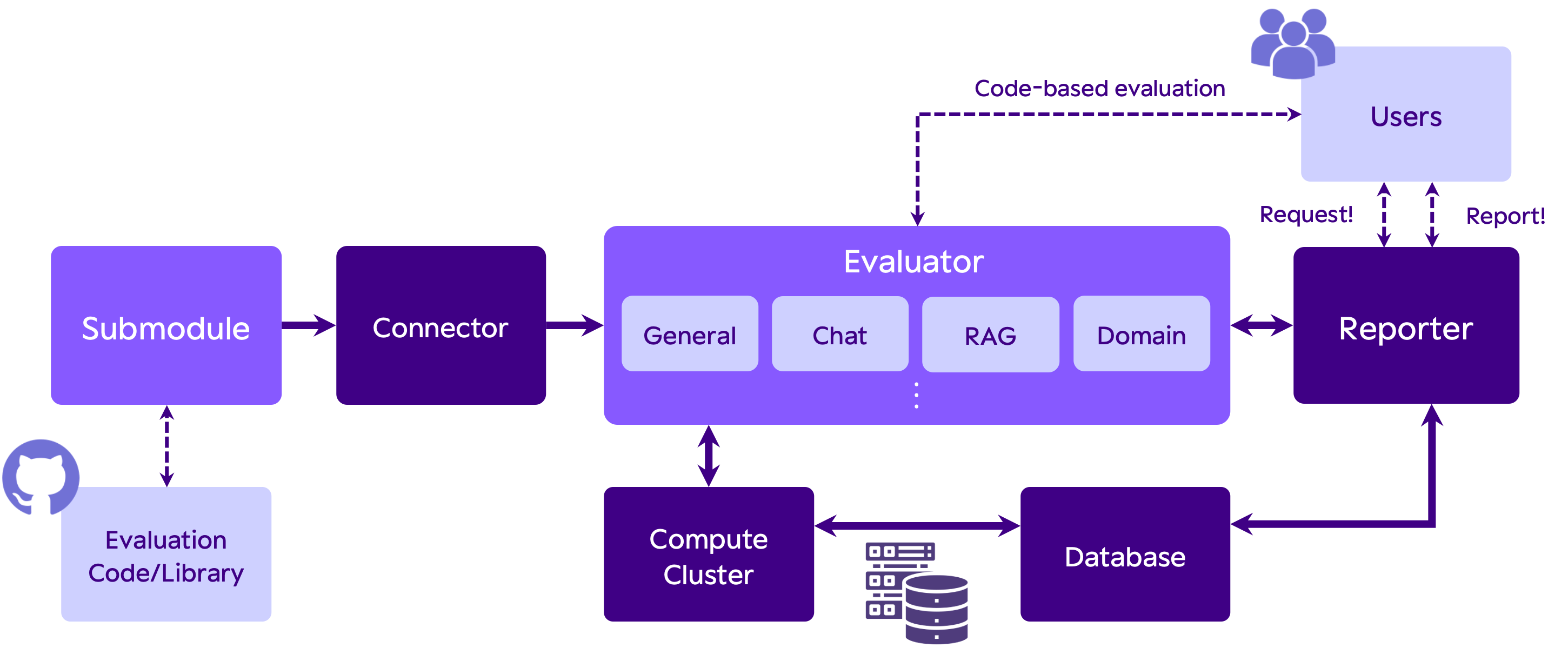

Architecture

Evaluator runs the library given by the Connector

Running

(h6_en is lm-eval)

(h6_en is lm-eval)Results

- JSON, one for each task.

- They have a Reporter class that summarizes and analyzes the results saved by the evaluator

OpenAI evals

- Link: openai/evals: Evals is a framework for evaluating LLMs and LLM systems, and an open-source registry of benchmarks.

- I like their documentation!

Running

oaieval gpt-3.5-turbo test-matchTask formats

Data

Data has to be in JSONL format.

{ "input": [ { "role": "system", "content": "You are an assistant with knowledge of U.S. state laws. Answer the questions accurately." }, { "role": "user", "content": "List the states where adultery is technically illegal. Only provide a list of states with no explanation." } ], "ideal": "Alabama, Arizona, Florida, Idaho, Illinois, Kansas, Michigan, Minnesota, Mississippi, New York, North Carolina, Oklahoma, Rhode Island, South Carolina, Virginia, Wisconsin, Georgia" }Building an eval

Registering the eval2:

<eval_name>: id: <eval_name>.dev.v0 description: <description> metrics: [accuracy] <eval_name>.dev.v0: class: evals.elsuite.basic.match:Match args: samples_jsonl: <eval_name>/samples.jsonlEval templates

- evals/docs/eval-templates.md at main · openai/evals templates to make task creation easier

- e.g. basic: match/fuzzy-match/..

- model-graded: use a model to parse the completion into an easily parsable shape, e.g. yes/no/maybe.

Model-graded templates

Sample yaml:3

humor_likert: prompt: |- Is the following funny? {completion} Answer using the scale of 1 to 5, where 5 is the funniest. choice_strings: "12345" choice_scores: from_strings input_outputs: input: completionclosedqa: config_dict = yaml.load(yaml_path.read_text()) prompt: |- You are assessing a submitted answer on a given task based on a criterion. Here is the data: [BEGIN DATA] *** [Task]: {input} *** [Submission]: {completion} *** [Criterion]: {criteria} *** [END DATA] Does the submission meet the criterion? First, write out in a step by step manner your reasoning about the criterion to be sure that your conclusion is correct. Avoid simply stating the correct answers at the outset. Then print only the single character "Y" or "N" (without quotes or punctuation) on its own line corresponding to the correct answer. At the end, repeat just the letter again by itself on a new line. Reasoning: eval_type: cot_classify choice_scores: "Y": 1.0 "N": 0.0 choice_strings: 'YN' input_outputs: input: "completion"- Includes

- closedqa (is the answer given in the prompt?)

- battle.yaml: pairwise choice basically

- Notably, I don’t really see a way to do multiple choice using their basic eval templates, a la lm-eval

doc_to_choice.

Inference

- Completion functions: evals/docs/completion-fns.md at main · openai/evals

- generalization of “completion”, e.g. also to allow the model to open a browser or whatever

langchain/llm/text-davinci-003: class: evals.completion_fns.langchain_llm:LangChainLLMCompletionFn args: llm: OpenAI llm_kwargs: model_name: text-davinci-003 langchain/llm/flan-t5-xl: class: evals.completion_fns.langchain_llm:LangChainLLMCompletionFn args: llm: HuggingFaceHub llm_kwargs: repo_id: google/flan-t5-xlModel types

Not immediately easily exposed, definitely supports OpenAI and LangChain and HF, but it’s not intuitive.

HF Lighteval

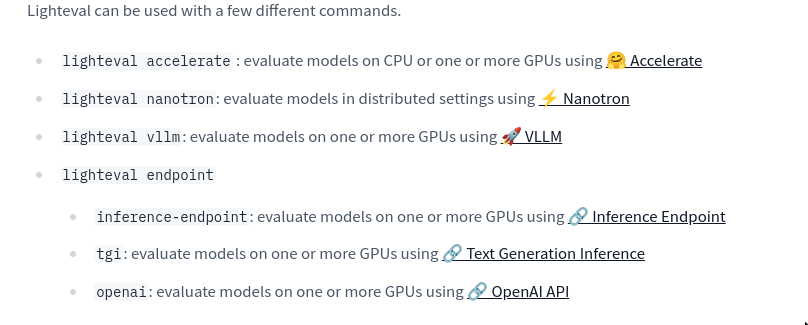

Running

lighteval accelerate \ "model_name=openai-community/gpt2" \ "leaderboard|truthfulqa:mc|0|0"The syntax:

{suite}|{task}|{num_few_shot}|{0 for strict num_few_shots, or 1 to allow a truncation if context size is too small}Task formats

- Python files: Adding a Custom Task.

- Example template:

lighteval/community_tasks/_template.py - German RAG eval: lighteval/community_tasks/german_rag_evals.py at main · huggingface/lighteval

- Currently implemented tasks: lighteval/src/lighteval/tasks at main · huggingface/lighteval

- Example task: lighteval/examples/custom_tasks_templates/custom_yourbench_task_mcq.py at main · huggingface/lighteval

return Doc( instruction=ZEROSHOT_QA_INSTRUCTION, task_name=task_name, query=ZEROSHOT_QA_USER_PROMPT.format(question=line["question"], options=options), choices=line["choices"], gold_index=gold_index, ) yourbench_mcq = LightevalTaskConfig( name="HF_TASK_NAME", # noqa: F821 suite=["custom"], prompt_function=yourbench_prompt, hf_repo="HF_DATASET_NAME", # noqa: F821 hf_subset="lighteval", hf_avail_splits=["train"], evaluation_splits=["train"], few_shots_split=None, few_shots_select=None, generation_size=8192, metric=[Metrics.yourbench_metrics], trust_dataset=True, version=0, )Features

Inference

Model types

Many, and model configs are yamls: lighteval/examples/model_configs at main · huggingface/lighteval. For example: lighteval/examples/model_configs/litellm_model.yaml at main · huggingface/lighteval

model_parameters: model_name: "openai/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B" provider: "openai" base_url: "https://router.huggingface.co/hf-inference/v1" generation_parameters: temperature: 0.5 max_new_tokens: 256 top_p: 0.9 seed: 0 repetition_penalty: 1.0 frequency_penalty: 0.0Misc

Their default prompts: lighteval/src/lighteval/tasks/default_prompts.py at main · huggingface/lighteval

HELM

- stanford-crfm/helm: Holistic Evaluation of Language Models (HELM)

- Their famous leaderboard: Holistic Evaluation of Language Models (HELM)

- Documentation: Index - CRFM HELM

Running

# Run benchmark helm-run --run-entries mmlu:subject=philosophy,model=openai/gpt2 --suite my-suite --max-eval-instances 10 # Summarize benchmark results helm-summarize --suite my-suiteTask formats

Features

Inference

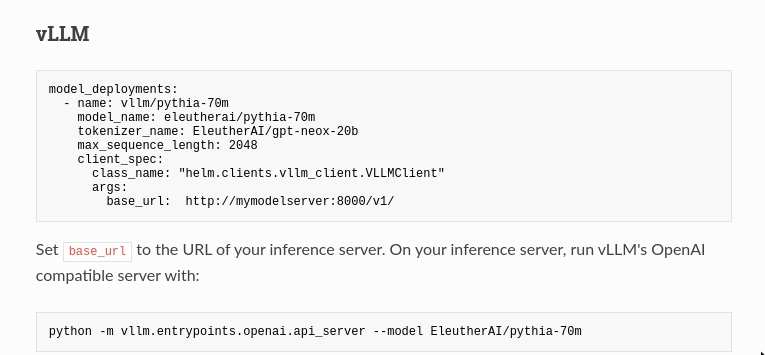

A model has metadata (description) and deployment (actually how to run it / implementation). Adding New Models - CRFM HELM Both are yamls.

HF model deployiment (but running locally!):

- name: huggingface/gemma-2-9b-it model_name: google/gemma-2-9b-it tokenizer_name: google/gemma-2-9b max_sequence_length: 8192 client_spec: class_name: "helm.clients.huggingface_client.HuggingFaceClient" args: device_map: auto torch_dtype: torch.bfloat16I’m not certain how that connects to their Hugging Face Model Hub Integration - CRFM HELM — tl;dr only

AutoModelForCausalLM. To run:helm-run \ --run-entries boolq:model=stanford-crfm/BioMedLM \ --enable-huggingface-models stanford-crfm/BioMedLM \ --suite v1 \ --max-eval-instances 10All (many!) deployments: helm/src/helm/config/model_deployments.yaml at main · stanford-crfm/helm

vLLM example uses a OpenAI-compatible inference server

OpenCompass

- Welcome to OpenCompass’ documentation! — OpenCompass 0.4.2 documentation

- open-compass/opencompass: OpenCompass is an LLM evaluation platform, supporting a wide range of models (Llama3, Mistral, InternLM2,GPT-4,LLaMa2, Qwen,GLM, Claude, etc) over 100+ datasets.

Never heard of it but looks cool! And supports many types of evals.

Subjective Evaluation Guidance — OpenCompass 0.4.2 documentation

All configs in Python! models tasks etc.

Model types

All configs in python!

# model_cfg.py from opencompass.models import HuggingFaceCausalLM models = [ dict( type=HuggingFaceCausalLM, path='huggyllama/llama-7b', model_kwargs=dict(device_map='auto'), tokenizer_path='huggyllama/llama-7b', tokenizer_kwargs=dict(padding_side='left', truncation_side='left'), max_seq_len=2048, max_out_len=50, run_cfg=dict(num_gpus=8, num_procs=1), ) ]OpenAI:

from opencompass.models import OpenAI models = [ dict( type=OpenAI, # Using the OpenAI model # Parameters for `OpenAI` initialization path='gpt-4', # Specify the model type key='YOUR_OPENAI_KEY', # OpenAI API Key max_seq_len=2048, # The max input number of tokens # Common parameters shared by various models, not specific to `OpenAI` initialization. abbr='GPT-4', # Model abbreviation used for result display. max_out_len=512, # Maximum number of generated tokens. batch_size=1, # The size of a batch during inference. run_cfg=dict(num_gpus=0), # Resource requirements (no GPU needed) ), ]OpenCompass VLMEvalKit

Same creators as the above one, multimodal eval.

DeepEval

- confident-ai/deepeval: The LLM Evaluation Framework

- basically unit-test for LLMs — optionally literally using pytest!

- “large amount of metrics” usable with “ANY LLM of your choice”

- includes RAG tool use etc.

- have their free cloud thingy to share reports etc., optional but they push it heavily. Something like wandb — visualize scores compare etc.

- Supports evaluating LlamaIndex | DeepEval

Tasks

From their README:

import pytest from deepeval import assert_test from deepeval.metrics import GEval from deepeval.test_case import LLMTestCase, LLMTestCaseParams def test_case(): correctness_metric = GEval( name="Correctness", criteria="Determine if the 'actual output' is correct based on the 'expected output'.", evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT, LLMTestCaseParams.EXPECTED_OUTPUT], threshold=0.5 ) test_case = LLMTestCase( input="What if these shoes don't fit?", # Replace this with the actual output from your LLM application actual_output="You have 30 days to get a full refund at no extra cost.", expected_output="We offer a 30-day full refund at no extra costs.", retrieval_context=["All customers are eligible for a 30 day full refund at no extra costs."] ) assert_test(test_case, [correctness_metric])Models

- the usual ones, including neat HF support k The vLLM bit describes using an openAI-compatible endpoints: vLLM | DeepEval - The Open-Source LLM Evaluation Framework, same as for LMStudio

deepeval set-local-model --model-name=<model_name> \ --base-url="http://localhost:8000/v1/" \ --api-key=<api-key>Misc

- Can do component-based evals through

@observedecorators, “avoiding rewriting your app just for testing” - Supports many vector databases eval for RAG retrieval eval

Other stuff

FastChat MTBench

- FastChat/fastchat/llm_judge at main · lm-sys/FastChat

- pure Python, llm-as-a-judge

- one python script to generate answers, another to get LLM judgements, a third to calculate results and draw plots — neat pattern

- FastChat powers LLM Arena, and also provides an OpenAI-compatible API for the models it supports

Autoevals

- braintrustdata/autoevals: AutoEvals is a tool for quickly and easily evaluating AI model outputs using best practices.

- python+TS, no runner but framework

- API-first, doesn’t try to run local inference, which again I like

- Generally I really like it

Pydantic evals

- Evals - PydanticAI

- Yet another unit tests for LLM approach, flexible, supports LLM-as-a-judge

MORE

Ragas

- explodinggradients/ragas: Supercharge Your LLM Application Evaluations 🚀

- e.g. AspectCritic Metrics - Ragas

-

https://github.com/EvolvingLMMs-Lab/lmms-eval/blob/main/examples/models/openai_compatible.sh: ↩︎

-

https://github.com/openai/evals/blob/main/evals/registry/modelgraded/closedqa.yaml[evals/evals/registry/modelgraded/humor.yaml at main · openai/evals](https://github.com/openai/evals/blob/main/evals/registry/modelgraded/humor.yaml); https://github.com/openai/evals/blob/main/evals/registry/modelgraded/closedqa.yaml ↩︎

-

Day 2345 (03 Jun 2025)

Deploying FastChat locally with litellm and GitHub models

Just some really quick notes on this, it’s pointless and redundant but I’ll need these later

- lm-sys/FastChat: An open platform for training, serving, and evaluating large language models. Release repo for Vicuna and Chatbot Arena.

- LiteLLM Proxy Server (LLM Gateway) | liteLLM

LiteLLM

Create .yaml with a github models model:

model_list: # - model_name: github-Llama-3.2-11B-Vision-Instruct # Model Alias to use for requests - model_name: minist # Model Alias to use for requests litellm_params: model: github/Ministral-3B api_key: "os.environ/GITHUB_API_KEY" # ensure you have `GITHUB_API_KEY` in your .envAfter setting GITHUB_API_KEY,

litellm --config config.yamlFastChat

- Install FastChat, start the controller:

python3 -m fastchat.serve.controller

- FastChat model config:

{ "minist": { "model_name": "minist", "api_base": "http://0.0.0.0:4000/v1", "api_type": "openai", "api_key": "whatever", "anony_only": false } }- Run the webUI

python3 -m fastchat.serve.gradio_web_server_multi --register-api-endpoint-file ../model_config.json- Direct chat now works!

-

Day 2343 (01 Jun 2025)

Переміщення бухгалтерії 1С 1C

TIL about 1C when I had to move it from one windows laptop to another. First and last windows post here hopefully

Ref: 1С – как перенести базу на другой компьютер

Long story short:

- 1C v 7.7 from 1999 can be literally copied to another laptop and it works, windows is good at this.

- the database is also a directory, which is usually shown when you start 1C and choose the DB to open

- that directory can be copied

- I THINK it’s good karma if the path on both computers is identical, but am not certain

- if the version matches that should be it

- no idea how to check if it worked :)